Chunks

A Chunk is the core data structure at the heart of Rerun: it dictates how data gets logged, injected, stored, and queried. A basic understanding of chunks is important to understand why and how Rerun and its APIs work the way they do.

How Rerun stores data

All the data you send into Rerun is stored in chunks, always.

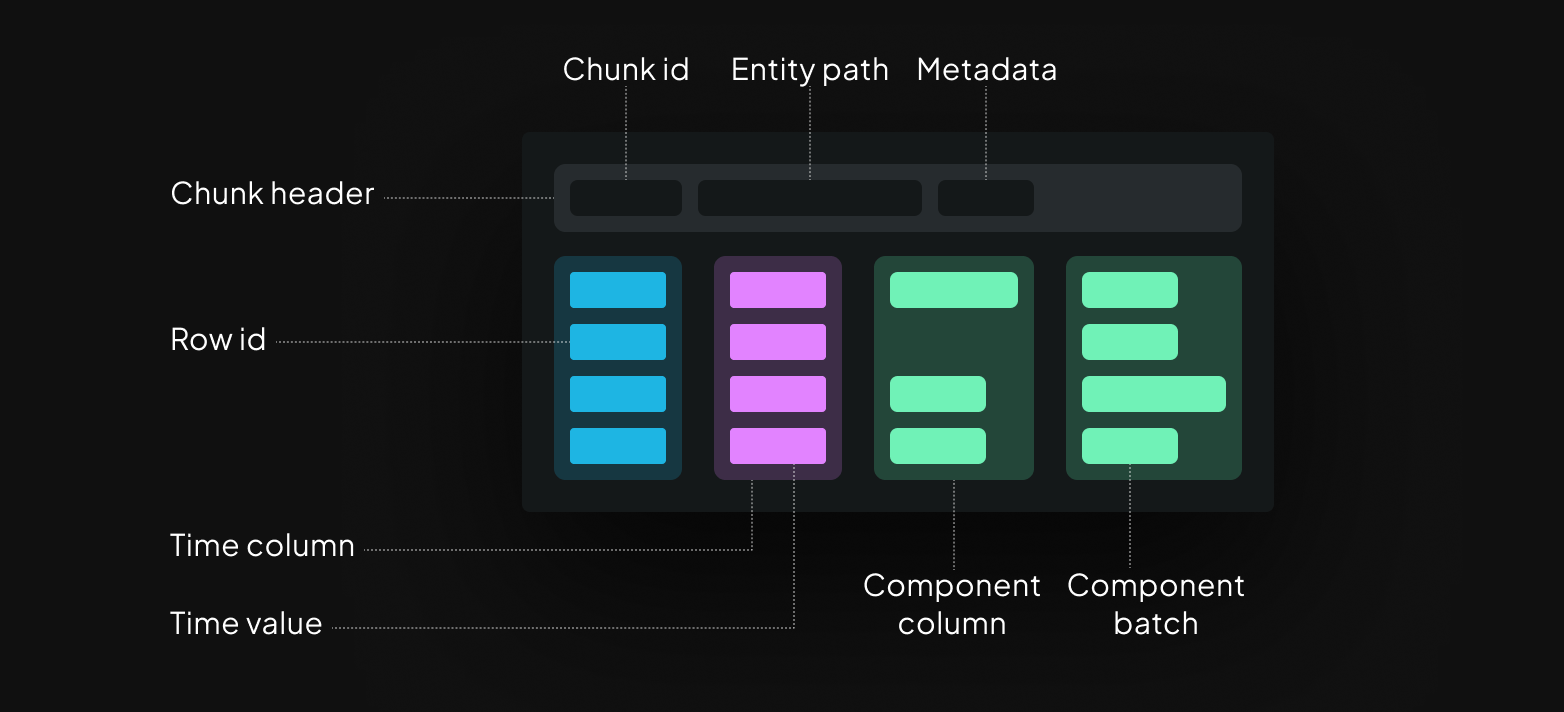

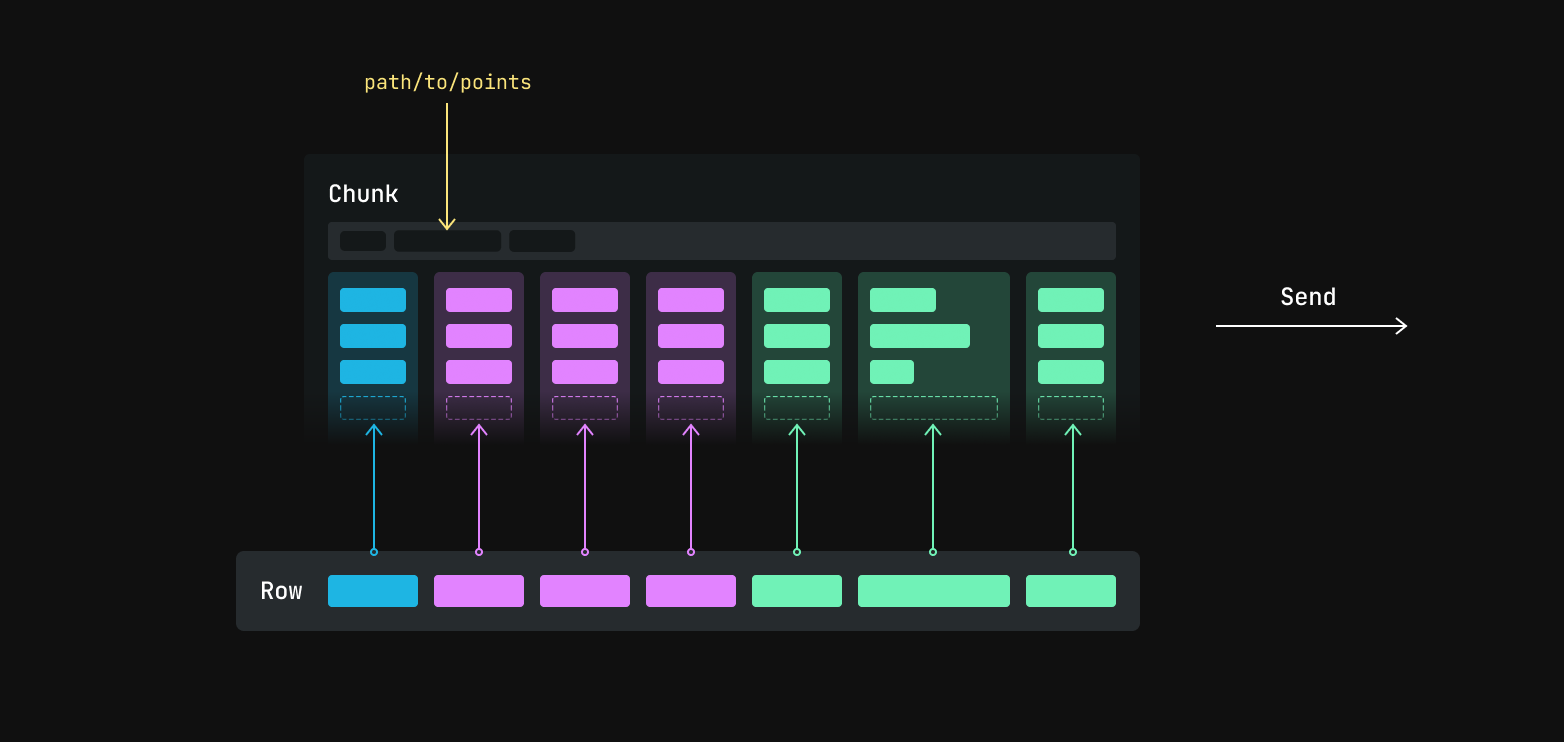

A chunk is an Arrow-encoded, column-oriented table of binary data:

A Component Column contains one or more Component Batches, which in turn contain one or more instances (that is, a component is always an array). Each component batch corresponds to a single Row ID and one time point per timeline.
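To make this layout concrete, here is a minimal pure-Python sketch of a chunk under a much-simplified model. `ToyChunk` is a hypothetical class, not part of the Rerun SDK. Integer row IDs stand in for real globally unique row IDs, and Arrow encoding is ignored entirely. The sketch shows the invariant described above: the control column, every index column, and every component column hold one entry per row, and each component cell is itself a batch (a list of instances).

```python
from dataclasses import dataclass, field


@dataclass
class ToyChunk:
    """Simplified stand-in for a chunk (hypothetical, not the Rerun SDK)."""

    entity_path: str
    # Control column: one row ID per row (integers stand in for real row IDs).
    row_ids: list = field(default_factory=list)
    # Index columns: timeline name -> one time point per row.
    timelines: dict = field(default_factory=dict)
    # Component columns: component name -> one batch (a list of instances) per row.
    components: dict = field(default_factory=dict)

    def num_rows(self) -> int:
        return len(self.row_ids)

    def is_consistent(self) -> bool:
        # Every index and component column must be exactly as tall as the control column.
        columns = list(self.timelines.values()) + list(self.components.values())
        return all(len(column) == self.num_rows() for column in columns)


chunk = ToyChunk(
    entity_path="points",
    row_ids=[0, 1],
    timelines={"frame": [10, 11]},
    components={
        # Row 0 holds a batch of two positions, row 1 a batch of one.
        "Points3D:positions": [[(0.0, 0.0, 0.0), (1.0, 1.0, 1.0)], [(2.0, 2.0, 2.0)]],
        "Points3D:radii": [[0.1], [0.2]],
    },
)
print(chunk.num_rows())       # 2
print(chunk.is_consistent())  # True
```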

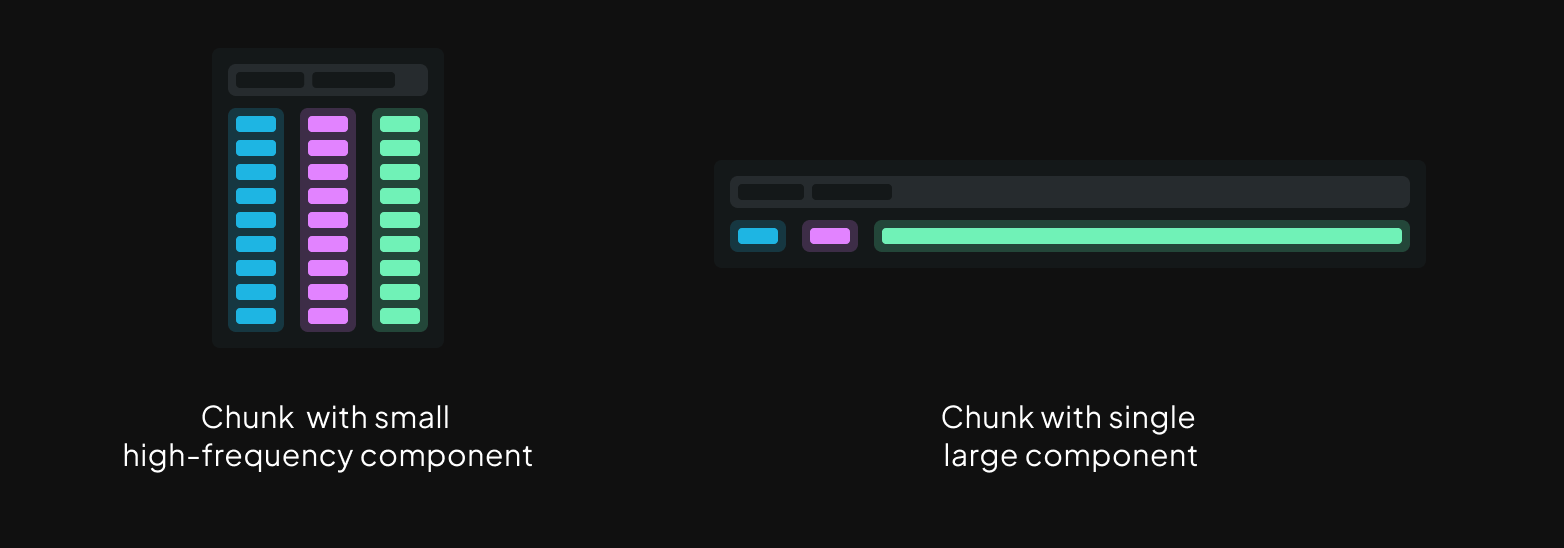

This design makes it possible to keep chunks within a target size range, even for recordings that combine low-frequency but large data like point clouds or tensors (wide columns) with high-frequency but small signals (tall columns).
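A back-of-the-envelope sketch shows how a size target translates into very different row counts for wide and tall columns. The 8 MiB target, the `rows_per_chunk` helper, and the data sizes below are illustrative assumptions, not Rerun's actual settings.

```python
# Illustrative assumption: an 8 MiB target size per chunk.
TARGET_CHUNK_BYTES = 8 * 1024 * 1024


def rows_per_chunk(bytes_per_row: int, target_bytes: int = TARGET_CHUNK_BYTES) -> int:
    """Rows of a given size that fit under the target (toy model: a row is never split, so at least 1)."""
    return max(1, target_bytes // bytes_per_row)


# Wide column: a 1,000,000-point cloud of float32 xyz positions per row.
point_cloud_row_bytes = 1_000_000 * 3 * 4  # 12,000,000 bytes
# Tall column: a single float64 scalar per row.
scalar_row_bytes = 8

print(rows_per_chunk(point_cloud_row_bytes))  # 1
print(rows_per_chunk(scalar_row_bytes))       # 1048576
```

Under these assumptions, a chunk of point clouds holds a single row while a chunk of scalars holds about a million, so a fixed row count would be a poor proxy for chunk size.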

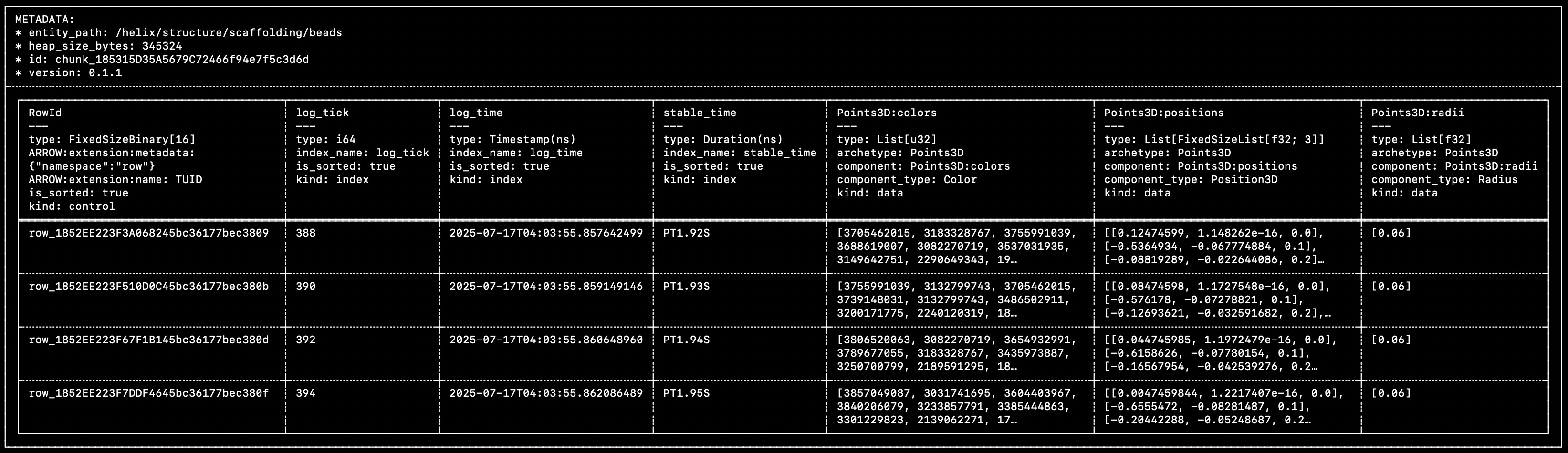

Here's an excerpt from a real-world chunk, taken from the Helix example:

This closely matches the diagram above:

- A single control column that contains the globally unique row IDs.
- Multiple time/index columns (`log_tick`, `log_time`, `stable_time`).
- Multiple component columns (`Points3D:colors`, `Points3D:positions`, `Points3D:radii`).

Within each row of each component column, the individual cells are Component Batches. Component batches are the atomic unit of data in Rerun.

The data in this specific chunk was logged with the following code:

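```python
import numpy as np
import rerun as rr

# Excerpt: `time`, `bounce_lerp`, `points1`, `points2`, `times`, and `NUM_POINTS`
# are defined elsewhere in the Helix example.
rr.set_time("stable_time", duration=time)

beads = [bounce_lerp(points1[n], points2[n], times[n]) for n in range(NUM_POINTS)]
# One grayscale value per bead, repeated along the last axis into an RGB triple.
colors = [[int(bounce_lerp(80, 230, times[n] * 2))] for n in range(NUM_POINTS)]

rr.log(
    "helix/structure/scaffolding/beads",
    rr.Points3D(beads, radii=0.06, colors=np.repeat(colors, 3, axis=-1)),
)
```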

You can learn more about chunks and how they came to be in this blog post.

Getting chunks into Rerun

If you've used the Rerun SDK before, you know it doesn't actually force you to manually craft these chunks byte by byte; that would be rather cumbersome!

How does one create and store chunks in Rerun, then?

The row-oriented way: log

The log API is generally what we show in the getting-started guides since it's the easiest to use:

```python
"""
Update a scalar over time.

See also the `scalar_column_updates` example, which achieves the same thing in a single operation.
"""

from __future__ import annotations

import math

import rerun as rr

rr.init("rerun_example_scalar_row_updates", spawn=True)

for step in range(64):
    rr.set_time("step", sequence=step)
    rr.log("scalars", rr.Scalars(math.sin(step / 10.0)))
```

The log API makes it possible to send data into Rerun on a row-by-row basis, without requiring any extra effort.

This row-oriented interface makes it very easy to integrate into an existing codebase and just start logging data as it comes (hence the name).

Reference:

But if you're handing a bunch of rows of data over to Rerun, how does it end up neatly packaged in columnar chunks?

How are these rows turned into columns?

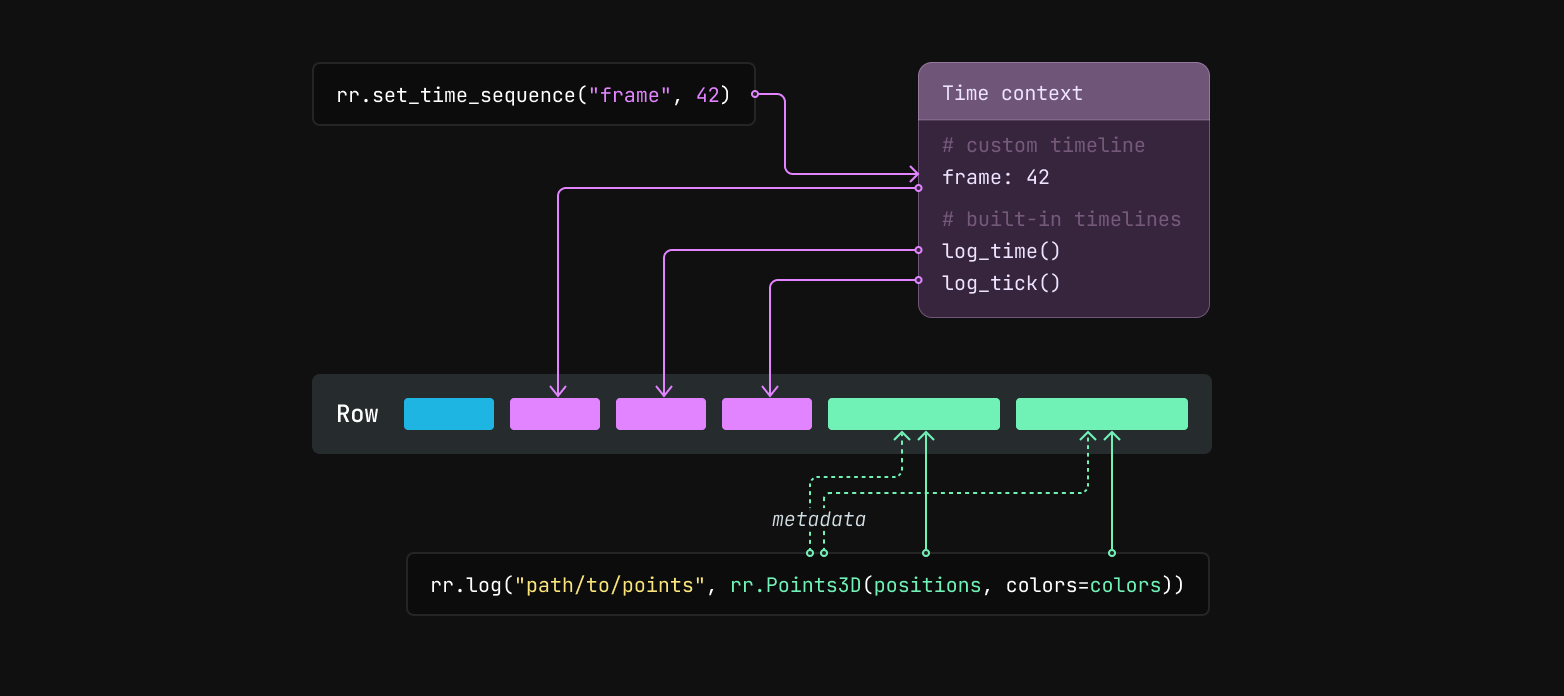

Before logging data, you can use the rr.set_time_ APIs to update the SDK's time context with timestamps for custom timelines.

For example, rr.set_time("frame", sequence=42) will set the "frame" timeline's current value to 42 in the time context.

When you later call rr.log, the SDK will generate a row id and values for the built-in timelines log_time and log_tick.

It will also grab the current values for any custom timelines from the time context.
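This time-context bookkeeping can be modeled in a few lines. The sketch is hypothetical: `ToyTimeContext` and `stamp_row` are not SDK names, integer counters stand in for real row IDs and for `log_tick`, and the wall clock stands in for `log_time`. It shows the two properties described above: `set_time` only updates the context, and each logged row gets a snapshot of the custom timelines plus the built-in ones.

```python
import itertools
import time


class ToyTimeContext:
    """Hypothetical model of the SDK's time context (not Rerun's implementation)."""

    def __init__(self):
        self._custom = {}                   # custom timeline name -> current value
        self._log_tick = itertools.count()  # toy log_tick: increments once per stamped row
        self._row_ids = itertools.count()   # stand-in for globally unique row IDs

    def set_time(self, timeline, value):
        # Analogous to rr.set_time("frame", sequence=42): only updates the context.
        self._custom[timeline] = value

    def stamp_row(self):
        # What a log call attaches to its row: a row ID plus one value per timeline.
        timepoints = dict(self._custom)     # snapshot, so later set_time calls don't leak in
        timepoints["log_tick"] = next(self._log_tick)
        timepoints["log_time"] = time.time_ns()  # wall clock stands in for log_time
        return next(self._row_ids), timepoints


ctx = ToyTimeContext()
ctx.set_time("frame", 42)
row_id, timepoints = ctx.stamp_row()
print(row_id, timepoints["frame"], timepoints["log_tick"])  # 0 42 0
ctx.set_time("frame", 43)
row_id, timepoints = ctx.stamp_row()
print(row_id, timepoints["frame"], timepoints["log_tick"])  # 1 43 1
```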

Any data passed to rr.log or rr.log_components becomes component batches.

The row id, timestamps, and logged component batches are then encoded as Apache Arrow arrays and together make up a row. That row is then passed to a batcher, which appends the values from the row to the current chunk for the entity path.
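The row-to-column step can be sketched as a toy batcher that keeps one set of column buffers per entity path and appends each incoming row to them. `ToyBatcher`, `push_row`, and the `Scalars:scalars` column name are hypothetical. The sketch also assumes every row logged to an entity carries the same timelines and components, so the columns stay aligned without any missing cells.

```python
from collections import defaultdict


class ToyBatcher:
    """Hypothetical sketch: transposes rows into per-entity column buffers."""

    def __init__(self):
        # entity path -> the "current chunk" being built for that entity
        self.current = {}

    def push_row(self, entity_path, row_id, timepoints, components):
        chunk = self.current.setdefault(
            entity_path,
            {"row_ids": [], "timelines": defaultdict(list), "components": defaultdict(list)},
        )
        # Each field of the row is appended to the end of its own column.
        chunk["row_ids"].append(row_id)
        for timeline, value in timepoints.items():
            chunk["timelines"][timeline].append(value)
        for name, batch in components.items():
            chunk["components"][name].append(batch)


batcher = ToyBatcher()
batcher.push_row("scalars", 0, {"step": 0}, {"Scalars:scalars": [0.0]})
batcher.push_row("scalars", 1, {"step": 1}, {"Scalars:scalars": [0.5]})

chunk = batcher.current["scalars"]
print(chunk["row_ids"])                        # [0, 1]
print(chunk["timelines"]["step"])              # [0, 1]
print(chunk["components"]["Scalars:scalars"])  # [[0.0], [0.5]]
```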

The current chunk is then sent to its destination, either periodically or as soon as it crosses a size threshold. Building up small column chunks before sending from the SDK trades off a small amount of latency and memory use in favor of more efficient transfer and ingestion. You can read about how to configure the batcher here.
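The flush rule above can be sketched as an either/or check on size and age. `ToyFlushPolicy` and its thresholds are hypothetical and do not mirror the real batcher's configuration; the current time is passed in as a parameter so the sketch is deterministic.

```python
class ToyFlushPolicy:
    """Hypothetical flush rule: send the current chunk when it is big enough OR old enough."""

    def __init__(self, max_bytes, max_age_s):
        self.max_bytes = max_bytes
        self.max_age_s = max_age_s
        self.pending_bytes = 0
        self.opened_at = None  # time at which the first row of the current chunk arrived

    def add(self, num_bytes, now):
        if self.opened_at is None:
            self.opened_at = now
        self.pending_bytes += num_bytes

    def should_flush(self, now):
        if self.opened_at is None:
            return False  # nothing pending
        too_big = self.pending_bytes >= self.max_bytes
        too_old = now - self.opened_at >= self.max_age_s
        return too_big or too_old

    def reset(self):
        # Called after the current chunk has been sent.
        self.pending_bytes = 0
        self.opened_at = None


policy = ToyFlushPolicy(max_bytes=1024, max_age_s=0.05)
policy.add(100, now=0.0)
print(policy.should_flush(now=0.01))  # False: small and recent
print(policy.should_flush(now=0.06))  # True: the periodic flush kicks in
policy.reset()
policy.add(2048, now=1.0)
print(policy.should_flush(now=1.0))   # True: the size threshold was crossed
```

Holding rows back until one of the two conditions fires is what trades a little latency and memory for fewer, larger transfers.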

The column-oriented way: send_columns

The log API showcased above is designed to extract data from your running code as it's being generated. It is, by nature, row-oriented.

If you already have data stored in something more column-oriented, it can be both a lot easier and more efficient to send it to Rerun in that form directly.

This is what the send_columns API is for: it lets you efficiently update the state of an entity over time, sending data for multiple index and component columns in a single operation.

⚠️ The `send_columns` API bypasses the time context and micro-batcher

In contrast to the `log` API, `send_columns` does NOT add any other timelines to the data: neither the built-in timelines `log_time` and `log_tick`, nor any user timelines. Only the timelines explicitly included in the call to `send_columns` will be included.

```python
"""
Update a scalar over time, in a single operation.

This is semantically equivalent to the `scalar_row_updates` example, albeit much faster.
"""

from __future__ import annotations

import numpy as np

import rerun as rr

rr.init("rerun_example_scalar_column_updates", spawn=True)

times = np.arange(0, 64)
scalars = np.sin(times / 10.0)

rr.send_columns(
    "scalars",
    indexes=[rr.TimeColumn("step", sequence=times)],
    columns=rr.Scalars.columns(scalars=scalars),
)
```
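To connect the `send_columns` example back to the chunk layout, here is a toy model of the chunk the call conceptually produces. `toy_send_columns`, the `Scalars:scalars` column name, and the integer row IDs are hypothetical stand-ins, not the SDK's internals. Compared with the batcher path, note what is missing: there is no `log_time` or `log_tick` column, only the `step` timeline that was passed explicitly.

```python
import math


def toy_send_columns(entity_path, indexes, components):
    """Hypothetical model of the chunk a columnar send produces (not Rerun's code).

    `indexes` maps timeline name -> list of time points;
    `components` maps component name -> list of batches (one batch per row).
    """
    num_rows = len(next(iter(indexes.values())))
    for column in list(indexes.values()) + list(components.values()):
        if len(column) != num_rows:
            raise ValueError("all index and component columns must have the same length")
    return {
        "entity_path": entity_path,
        "row_ids": list(range(num_rows)),  # stand-in for generated row IDs
        # Only the explicitly provided timelines: no log_time, no log_tick.
        "timelines": {name: list(values) for name, values in indexes.items()},
        "components": {name: list(values) for name, values in components.items()},
        "num_rows": num_rows,
    }


times = list(range(64))
# One single-instance batch per row, i.e. one scalar per step.
scalars = [[math.sin(t / 10.0)] for t in times]

chunk = toy_send_columns("scalars", {"step": times}, {"Scalars:scalars": scalars})
print(chunk["num_rows"])           # 64
print(sorted(chunk["timelines"]))  # ['step']
```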

See also the reference: