Hand tracking and gesture recognition

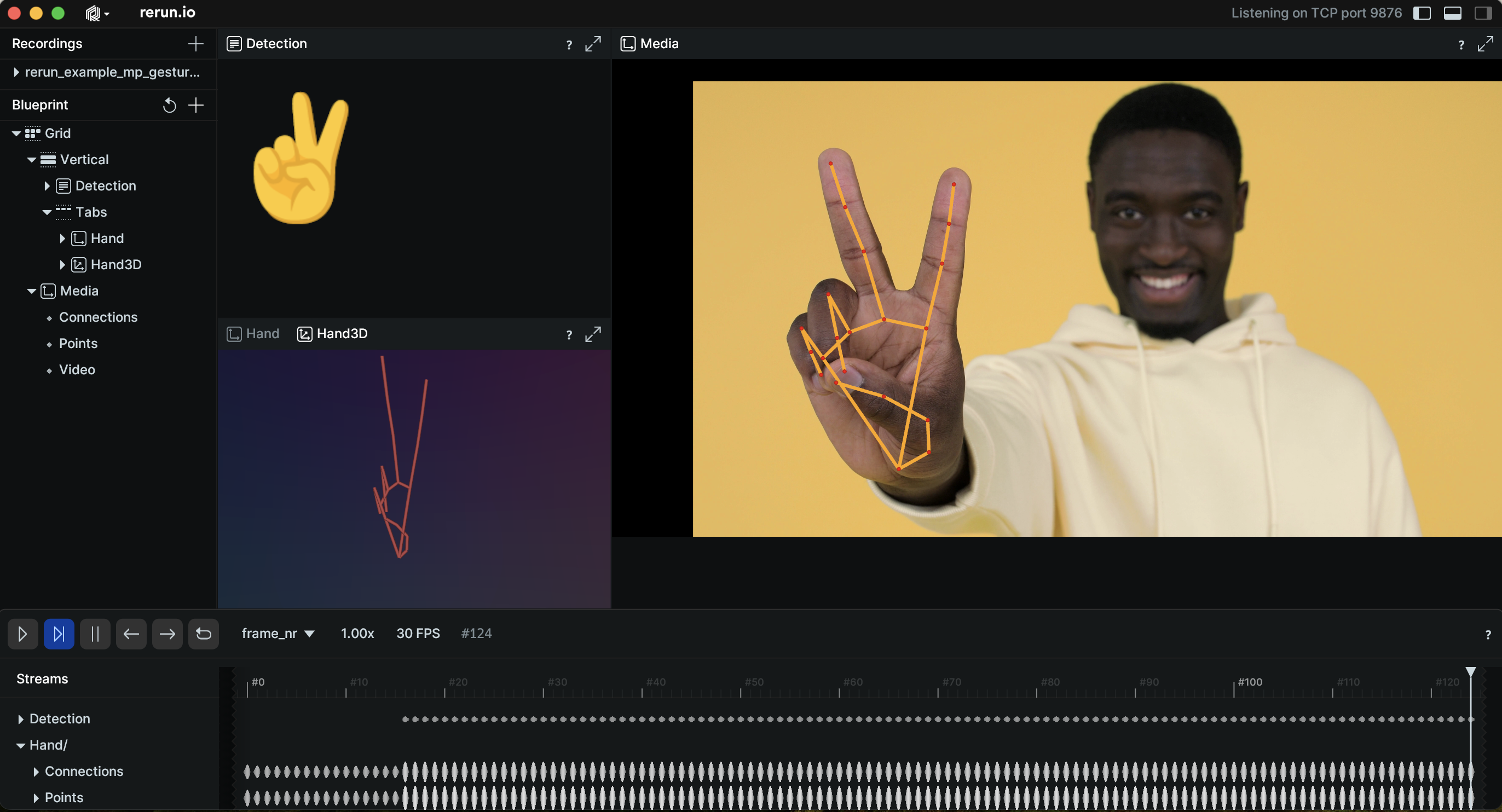

Use the MediaPipe Hand Landmark and Gesture Detection solutions to track hands and recognize gestures in images, videos, and camera streams.

Used Rerun types

Image, Points2D, Points3D, LineStrips2D, ClassDescription, AnnotationContext, TextDocument

Background

Hand tracking and gesture recognition technology aims to give devices the ability to interpret hand movements and gestures as commands or inputs. At its core, a pre-trained machine-learning model analyses the visual input and identifies hand landmarks and hand gestures. Because hand movements and gestures can be used to control smart devices, the technology has many practical applications. Human-Computer Interaction, Robotics, Gaming, and Augmented Reality are a few of the fields where its potential appears most promising.

In this example, the MediaPipe Gesture and Hand Landmark Detection solutions are used to detect and track hand landmarks and recognize gestures. Rerun is used to visualize the output of the MediaPipe solutions over time, which makes the behavior easy to analyze.

Logging and visualizing with Rerun

The visualizations in this example were created with the following Rerun code.

Timelines

For each processed video frame, all data sent to Rerun is associated with the two timelines frame_nr and frame_time.

    rr.set_time("frame_nr", sequence=frame_idx)
    rr.set_time("frame_time", duration=1e-9 * frame_time_nano)

Video

The input video is logged as a sequence of Image objects to the Media/Video entity.

    rr.log(
        "Media/Video",
        rr.Image(frame).compress(jpeg_quality=75)
    )

Hand landmark points

Logging the hand landmarks involves specifying connections between the points, extracting the hand landmark points, and logging them to the Rerun SDK. The 2D points are visualized over the video and in a separate entity. Meanwhile, the 3D points allow the creation of a 3D model of the hand for a more comprehensive representation of the hand landmarks.

The 2D and 3D points are logged through a combination of two archetypes.

For the 2D points, the Points2D and LineStrips2D archetypes are utilized. These archetypes help visualize the points and connect them with lines, respectively.

As for the 3D points, the logging process involves two steps. First, a static ClassDescription is logged that maps keypoint ids to labels and specifies how to connect the keypoints. Defining these connections automatically renders lines between them. MediaPipe provides the HAND_CONNECTIONS variable, which contains the list of (from, to) landmark indices that define the connections.

Second, the actual keypoint positions are logged in 3D with the Points3D archetype.
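To make the role of keypoint ids more concrete, the following is a minimal sketch of how connections defined per class could be resolved into line segments once positions and keypoint ids are known. It is purely illustrative and is not Rerun's implementation: `ClassInfo` and `resolve_segments` are hypothetical names, and the three-point "hand" is made-up data.

```python
from dataclasses import dataclass, field


@dataclass
class ClassInfo:
    """Hypothetical stand-in for a class description: a label plus keypoint connections."""

    class_id: int
    label: str
    keypoint_connections: list = field(default_factory=list)


def resolve_segments(class_info, keypoint_ids, positions):
    """Look up both endpoints of each connection by keypoint id and return 3D segments."""
    by_id = dict(zip(keypoint_ids, positions))
    return [
        (by_id[start], by_id[end])
        for start, end in class_info.keypoint_connections
        if start in by_id and end in by_id  # skip connections to keypoints that were not logged
    ]


# Made-up data: three keypoints, one connection pointing at a missing keypoint (id 5).
hand = ClassInfo(class_id=0, label="Hand3D", keypoint_connections=[(0, 1), (1, 2), (2, 5)])
positions = [(0.0, 0.0, 0.0), (0.1, 0.0, 0.0), (0.2, 0.1, 0.0)]
keypoint_ids = list(range(len(positions)))

segments = resolve_segments(hand, keypoint_ids, positions)
```

The point of the sketch is that positions alone carry no topology: the connections live with the class, and the keypoint ids are what tie each logged position to them.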

Label mapping and keypoint connections

    rr.log(
        "/",
        rr.AnnotationContext(
            rr.ClassDescription(
                info=rr.AnnotationInfo(id=0, label="Hand3D"),
                keypoint_connections=mp.solutions.hands.HAND_CONNECTIONS,
            )
        ),
        static=True,
    )
    rr.log("Hand3D", rr.ViewCoordinates.LEFT_HAND_Y_DOWN, static=True)

2D points
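The 2D logging code relies on `points`, `points1`, and `points2`, which are not defined in this section. One plausible way to derive them is sketched here, assuming the detector returns landmarks with `x` and `y` normalized to the range [0, 1]. The `Landmark` type, the helper names, and the sample values are hypothetical.

```python
from typing import NamedTuple


class Landmark(NamedTuple):
    """Hypothetical stand-in for a normalized landmark with x and y in [0, 1]."""

    x: float
    y: float


def to_pixel_points(landmarks, width, height):
    """Scale normalized coordinates to pixel coordinates of a width x height image."""
    return [(lm.x * width, lm.y * height) for lm in landmarks]


def split_connections(points, connections):
    """Return the start points and the end points of every (from, to) connection."""
    points1 = [points[start] for start, _ in connections]
    points2 = [points[end] for _, end in connections]
    return points1, points2


landmarks = [Landmark(0.5, 0.5), Landmark(0.25, 0.75), Landmark(0.75, 0.25)]
connections = [(0, 1), (0, 2)]  # illustrative subset, not the full hand skeleton

points = to_pixel_points(landmarks, width=640, height=480)
points1, points2 = split_connections(points, connections)
# One two-point line strip per connection.
segments = [list(pair) for pair in zip(points1, points2)]
```

Stacking `points1` and `points2` along a new second axis, as the logging code does with `np.stack`, yields the same start/end pairs as `segments`.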

    # Log points to the image and Hand entity
    for log_key in ["Media/Points", "Hand/Points"]:
        rr.log(
            log_key,
            rr.Points2D(points, radii=10, colors=[255, 0, 0])
        )

    # Log connections to the image and Hand entity
    for log_key in ["Media/Connections", "Hand/Connections"]:
        rr.log(
            log_key,
            rr.LineStrips2D(np.stack((points1, points2), axis=1), colors=[255, 165, 0])
        )

3D points

    rr.log(
        "Hand3D/Points",
        rr.Points3D(
            landmark_positions_3d,
            radii=20,
            class_ids=0,
            keypoint_ids=list(range(len(landmark_positions_3d))),
        ),
    )

Detection

To showcase gesture recognition, an image of the corresponding gesture emoji is displayed within a TextDocument under the Detection entity.
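As a rough illustration of how a recognized gesture could be turned into the Markdown shown in the TextDocument, here is a minimal sketch. Everything in it is an assumption: `EMOJI_BASE_URL` is a placeholder address, `GESTURE_IMAGES` is a made-up mapping from gesture category names to image files, and `gesture_markdown` is a hypothetical helper rather than a function from the example.

```python
# Placeholder location of the emoji images (assumption, not the example's real URL).
EMOJI_BASE_URL = "https://example.com/emoji/"

# Made-up mapping from gesture category names to image file names.
GESTURE_IMAGES = {
    "Thumb_Up": "thumbs_up.png",
    "Victory": "victory.png",
    "Open_Palm": "open_palm.png",
}


def gesture_markdown(gesture_name):
    """Return a Markdown image link for a known gesture, or an empty string otherwise."""
    image = GESTURE_IMAGES.get(gesture_name)
    if image is None:
        return ""  # unknown gesture or no gesture: show an empty document
    return f"![{gesture_name}]({EMOJI_BASE_URL}{image})"


markdown = gesture_markdown("Thumb_Up")
```

Returning an empty string for unrecognized gestures keeps the Detection entity updated on every frame, so a stale emoji does not linger after the gesture ends.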

    # Log the detection by using the appropriate image
    rr.log(
        "Detection",
        rr.TextDocument(f"".strip(), media_type=rr.MediaType.MARKDOWN),
    )

Run the code

To run this example, make sure you have the Rerun repository checked out and the latest SDK installed:

    pip install --upgrade rerun-sdk  # install the latest Rerun SDK
    git clone git@github.com:rerun-io/rerun.git  # Clone the repository
    cd rerun
    git checkout latest  # Check out the commit matching the latest SDK release

Install the necessary libraries specified in the requirements file:

    pip install -e examples/python/gesture_detection

To experiment with the provided example, simply execute the main Python script:

    python -m gesture_detection  # run the example

To customize it for other videos, adjust the maximum number of frames, explore additional features, or save the output, use the CLI with the --help option for guidance:

    python -m gesture_detection --help