Depth guided stable diffusion

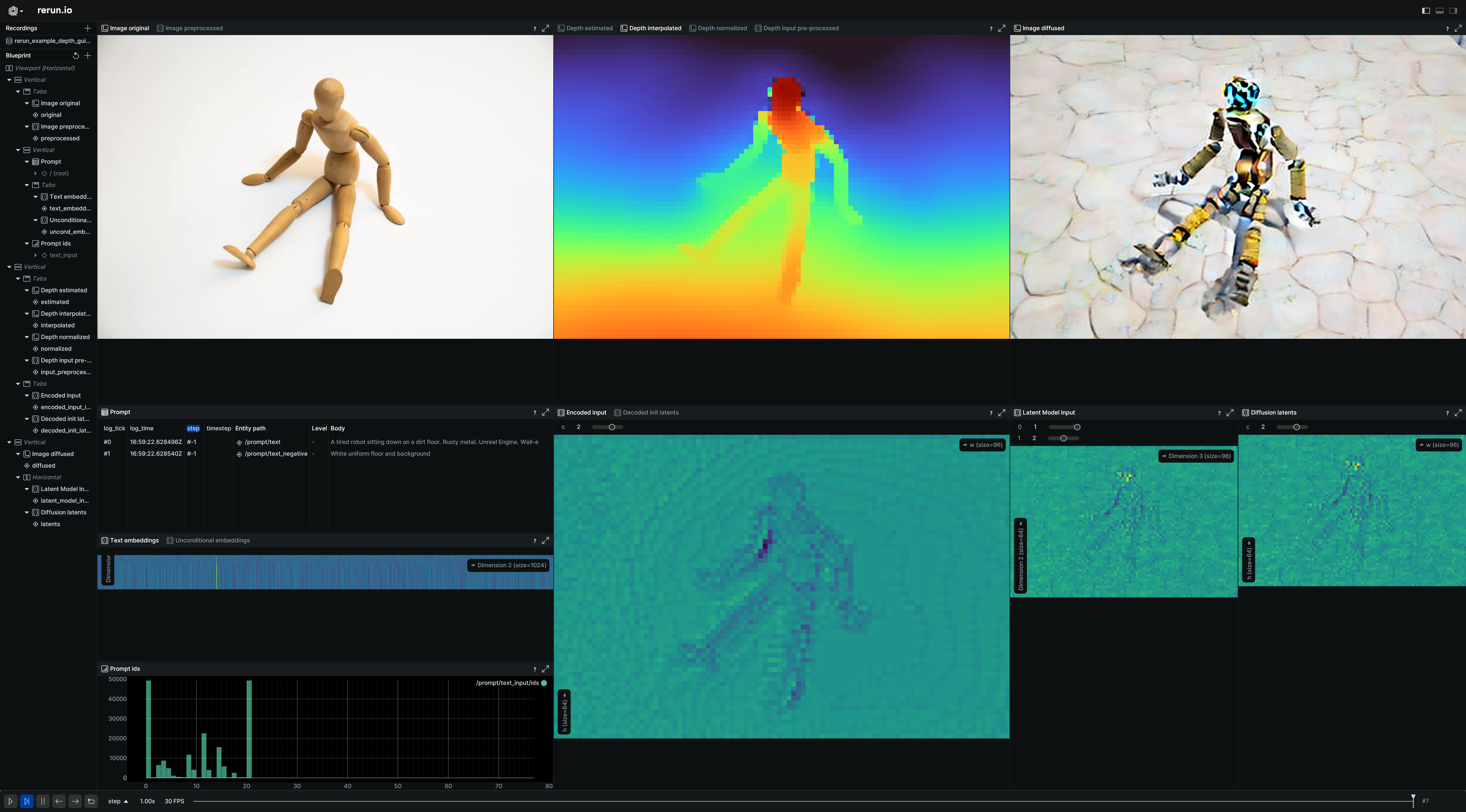

This example uses Depth Guided Stable Diffusion to generate images whose spatial layout follows a depth map. The depth map guides the Stable Diffusion model alongside the text prompt, so the generated image keeps the three-dimensional structure of the scene.

Used Rerun types

Image, Tensor, DepthImage, TextDocument, TextLog, BarChart

Background

Depth Guided Stable Diffusion enriches the image generation process by incorporating depth information, providing a unique way to control the spatial composition of generated images. This approach allows for more nuanced and layered creations, making it especially useful for scenes requiring a sense of three-dimensionality.

Logging and visualizing with Rerun

The visualizations in this example were created with the Rerun SDK, demonstrating the integration of depth information in the Stable Diffusion image generation process. Here is the code for generating the visualization in Rerun.

Prompt

Visualizing the prompt and negative prompt

rr.log("prompt/text", rr.TextLog(prompt))

rr.log("prompt/text_negative", rr.TextLog(negative_prompt))

Text

Visualizing the text input ids, the text attention mask and the unconditional input ids
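As a rough illustration of what these arrays contain, the sketch below pads a sequence of token ids to a fixed length and builds the matching attention mask. The whitespace tokenizer, `PAD_ID`, `MAX_LENGTH`, and the `encode` helper are all hypothetical stand-ins, not the tokenizer this example actually uses, and the unconditional input is assumed to come from an empty negative prompt.

```python
# Toy illustration only: a hypothetical whitespace "tokenizer",
# not the tokenizer used by Stable Diffusion.
PAD_ID = 0      # assumed padding id
MAX_LENGTH = 8  # assumed fixed sequence length (real models use a larger value)


def encode(text, vocab):
    """Return (input_ids, attention_mask), both padded or truncated to MAX_LENGTH."""
    # Assign each new word the next free id; known words reuse their id.
    ids = [vocab.setdefault(word, len(vocab) + 1) for word in text.lower().split()]
    ids = ids[:MAX_LENGTH]
    mask = [1] * len(ids)  # 1 marks a real token
    padding = MAX_LENGTH - len(ids)
    return ids + [PAD_ID] * padding, mask + [0] * padding  # 0 marks padding


vocab = {}
text_input_ids, attention_mask = encode("a cozy room with a view", vocab)
uncond_input_ids, _ = encode("", vocab)  # assumed: empty negative prompt
```

Because every sequence has the same length, the ids and the mask can be shown as bar charts with one bar per token position.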

rr.log("prompt/text_input/ids", rr.BarChart(text_input_ids))

rr.log("prompt/text_input/attention_mask", rr.BarChart(text_inputs.attention_mask))

rr.log("prompt/uncond_input/ids", rr.Tensor(uncond_input.input_ids))

Text embeddings

Visualizing the text embeddings (i.e., numerical representation of the input texts) from the prompt and negative prompt.

rr.log("prompt/text_embeddings", rr.Tensor(prompt_embeds))

rr.log("prompt/negative_text_embeddings", rr.Tensor(negative_prompt_embeds))

Depth map

Visualizing the preprocessed input to the depth estimator, the estimated depth image, the interpolated depth image, and the normalized depth image.
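The depth stages named above can be sketched with plain NumPy. This is a minimal sketch under assumptions: the helpers `resize_nearest` and `normalize_to_unit_range` are hypothetical, the nearest-neighbour resize stands in for whatever smoother interpolation the pipeline applies, and the [-1, 1] target range is assumed rather than taken from this example's code.

```python
import numpy as np


def resize_nearest(depth, out_h, out_w):
    """Nearest-neighbour resize of a 2D array (stand-in for a smoother interpolation)."""
    in_h, in_w = depth.shape
    rows = np.arange(out_h) * in_h // out_h
    cols = np.arange(out_w) * in_w // out_w
    return depth[rows[:, None], cols[None, :]]


def normalize_to_unit_range(depth):
    """Rescale linearly so the minimum maps to -1 and the maximum to +1.

    Assumes the depth map is not constant (otherwise this divides by zero).
    """
    d_min, d_max = depth.min(), depth.max()
    return 2.0 * (depth - d_min) / (d_max - d_min) - 1.0


# Fake "estimated" depth map; a real one comes from a depth estimation model.
estimated = np.linspace(0.5, 10.0, 64 * 64, dtype=np.float32).reshape(64, 64)
interpolated = resize_nearest(estimated, 8, 8)      # match a smaller target resolution
normalized = normalize_to_unit_range(interpolated)  # values now span [-1, 1]
```

Each of the three arrays corresponds to one of the depth stages logged in this section.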

rr.log("depth/input_preprocessed", rr.Tensor(pixel_values))

rr.log("depth/estimated", rr.DepthImage(depth_map))

rr.log("depth/interpolated", rr.DepthImage(depth_map))

rr.log("depth/normalized", rr.DepthImage(depth_map))

Latents

Log the latents, the representation of the images in the format used by the diffusion model.
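To make the four dimension names concrete, here is a small sketch of how a latent tensor's shape relates to the image size. The constants `VAE_SCALE_FACTOR = 8` and `LATENT_CHANNELS = 4` are assumptions based on common Stable Diffusion configurations, and `latent_shape` is a hypothetical helper, not part of this example.

```python
import numpy as np

VAE_SCALE_FACTOR = 8  # assumed spatial downsampling of the autoencoder
LATENT_CHANNELS = 4   # assumed number of latent channels


def latent_shape(batch, height, width):
    """Shape of the latent tensor for an image of the given pixel size."""
    return (batch, LATENT_CHANNELS, height // VAE_SCALE_FACTOR, width // VAE_SCALE_FACTOR)


rng = np.random.default_rng(0)
# Initial latents are random noise with shape (b, c, h, w).
latents = rng.standard_normal(latent_shape(1, 512, 768)).astype(np.float32)

# Pair each dimension with the name passed as dim_names when logging.
named_dims = dict(zip(["b", "c", "h", "w"], latents.shape))
```

Under these assumptions a 512x768 image corresponds to a latent tensor of shape (1, 4, 64, 96).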

rr.log("diffusion/latents", rr.Tensor(latents, dim_names=["b", "c", "h", "w"]))

Denoising loop

For each step in the denoising loop, we set the step and timestep sequence timelines and log the latent model input, noise prediction, latents, and image. This makes it possible to inspect every denoising step in the Rerun viewer.
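The quantities logged in the loop can be illustrated with a toy version of one common way to structure it: batch an unconditional and a text-conditioned branch, append the depth map as an extra channel, and combine the two noise predictions with classifier-free guidance. This is a sketch under assumptions, not this example's implementation: `fake_denoiser` is a hypothetical stand-in for the UNet, the guidance scale of 7.5 and the timestep values are illustrative, and the latent update is a placeholder for a real scheduler step.

```python
import numpy as np


def fake_denoiser(model_input, conditioning):
    """Hypothetical stand-in for the UNet: one 4-channel noise estimate per batch item."""
    return 0.1 * model_input[:, :4] + conditioning[:, None, None, None]


def apply_guidance(noise_uncond, noise_text, guidance_scale):
    """Classifier-free guidance: move the prediction away from the unconditional one."""
    return noise_uncond + guidance_scale * (noise_text - noise_uncond)


rng = np.random.default_rng(0)
latents = rng.standard_normal((1, 4, 8, 8))  # (b, c, h, w)
depth = np.zeros((2, 1, 8, 8))               # depth channel, duplicated for both branches
conditioning = np.array([0.0, 0.05])         # toy stand-ins for negative/positive prompt embeddings
timesteps = [999, 666, 333]                  # assumed, illustrative timestep values

for i, t in enumerate(timesteps):
    # Batch the unconditional and text-conditioned branches, then append depth.
    latent_model_input = np.concatenate([latents, latents], axis=0)
    latent_model_input = np.concatenate([latent_model_input, depth], axis=1)

    noise_pred = fake_denoiser(latent_model_input, conditioning)
    noise_uncond, noise_text = noise_pred[:1], noise_pred[1:]
    noise_pred = apply_guidance(noise_uncond, noise_text, guidance_scale=7.5)

    # Toy update; a real scheduler derives this step from the timestep t.
    latents = latents - 0.1 * noise_pred
```

Here `i` plays the role of the step index and `t` the scheduler timestep, the two values used for the timelines.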

rr.set_time("step", sequence=i)

rr.set_time("timestep", sequence=t)

rr.log("diffusion/latent_model_input", rr.Tensor(latent_model_input))

rr.log("diffusion/noise_pred", rr.Tensor(noise_pred, dim_names=["b", "c", "h", "w"]))

rr.log("diffusion/latents", rr.Tensor(latents, dim_names=["b", "c", "h", "w"]))

rr.log("image/diffused", rr.Image(image))

Diffused image

Finally, we log the diffused image generated by the model.
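Before an image like this can be displayed, the decoded float output is typically converted to 8-bit pixels. The sketch below assumes the decoder output lies roughly in [-1, 1] with layout (c, h, w); `to_uint8_image` is a hypothetical helper, and the exact conversion in this example may differ.

```python
import numpy as np


def to_uint8_image(decoded):
    """Map a (c, h, w) float array in roughly [-1, 1] to an (h, w, c) uint8 image."""
    scaled = np.clip(decoded / 2.0 + 0.5, 0.0, 1.0)  # [-1, 1] -> [0, 1], clamping outliers
    hwc = np.transpose(scaled, (1, 2, 0))            # channels last for display
    return (hwc * 255).round().astype(np.uint8)


# Three constant channels: below range, mid range, and above range.
decoded = np.stack([np.full((2, 3), -1.0), np.zeros((2, 3)), np.full((2, 3), 1.5)])
image_8 = to_uint8_image(decoded)
```

Values outside the expected range are clamped rather than wrapped, so an out-of-range activation shows up as pure black or full intensity.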

rr.log("image/diffused", rr.Image(image_8))

Run the code

To run this example, make sure you have the Rerun repository checked out and the latest SDK installed:

pip install --upgrade rerun-sdk # install the latest Rerun SDK

git clone git@github.com:rerun-io/rerun.git # Clone the repository

cd rerun

git checkout latest # Check out the commit matching the latest SDK release

Install the necessary libraries specified in the requirements file:

pip install -e examples/python/depth_guided_stable_diffusion

To run this example, use:

python -m depth_guided_stable_diffusion

You can specify your own image and prompts using:

python -m depth_guided_stable_diffusion [--img-path IMG_PATH] [--depth-map-path DEPTH_MAP_PATH] [--prompt PROMPT]
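For readers who want to see how such optional flags are typically parsed, here is a minimal `argparse` sketch covering only the three flags shown above. The `build_parser` function, the defaults, the help strings, and the `room.jpg` file name are assumptions for illustration; the example's real parser may define more options and different defaults.

```python
import argparse


def build_parser():
    """Hypothetical parser mirroring the three optional flags shown above."""
    parser = argparse.ArgumentParser(prog="depth_guided_stable_diffusion")
    parser.add_argument("--img-path", type=str, default=None,
                        help="Path to the input image (assumed optional).")
    parser.add_argument("--depth-map-path", type=str, default=None,
                        help="Path to a precomputed depth map (assumed optional).")
    parser.add_argument("--prompt", type=str, default=None,
                        help="Text prompt that guides the generation.")
    return parser


# Parse an explicit argument list instead of sys.argv so the sketch runs anywhere.
args = build_parser().parse_args(
    ["--img-path", "room.jpg", "--prompt", "a cozy cabin interior"]
)
```

Dashes in flag names become underscores on the parsed namespace, so `--img-path` is read back as `args.img_path`.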