Entity Filtering & Partial Update APIs

Rerun is building the multimodal data stack for teams that build Physical AI.

The 0.22 open source release brings entity filtering for finding data faster in the Viewer, significantly simplified APIs for partial & columnar updates, and numerous other enhancements.

Viewer entity filtering

One of the biggest improvements in this release is entity filtering. When working with complex scenes, you need a way to quickly find what matters. With the new filtering and search capabilities, you finally can!

Filtering works in both the Blueprint and Streams panels, supports full entity paths, and can match multiple keywords at once.
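The paragraph above doesn't spell out the exact matching rules. The following is a minimal sketch under an assumed rule: a query is split into whitespace-separated keywords, and an entity path matches only if every keyword occurs somewhere in the full path, ignoring case. The function names are hypothetical, and this is not Rerun's filtering code.

```python
def matches(entity_path: str, query: str) -> bool:
    """Hypothetical matcher: every whitespace-separated keyword must occur
    (case-insensitively) somewhere in the full entity path."""
    path = entity_path.lower()
    keywords = query.lower().split()
    # all() of an empty sequence is True, so an empty query matches everything.
    return all(keyword in path for keyword in keywords)


def filter_entities(entity_paths: list[str], query: str) -> list[str]:
    """Keep only the entity paths that match the query, preserving order."""
    return [p for p in entity_paths if matches(p, query)]


paths = [
    "/world/robot/arm/camera",
    "/world/robot/base",
    "/world/map/points",
    "/sensors/camera/image",
]

print(filter_entities(paths, "camera"))        # → ['/world/robot/arm/camera', '/sensors/camera/image']
print(filter_entities(paths, "robot camera"))  # → ['/world/robot/arm/camera']
print(filter_entities(paths, "/world/robot"))  # → ['/world/robot/arm/camera', '/world/robot/base']
```

Under this rule, each extra keyword narrows the results (all terms must match, not just any). A full path typed into the filter is simply treated as one long keyword.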

Improved partial & columnar updates

We've made it much easier to update only the parts of your data that change. With this release, partial updates of archetypes are now significantly simpler across Python, Rust, and C++.

Instead of requiring knowledge of the Rerun entity-component system internals, you can perform updates directly on the archetype:

```python
import rerun as rr

rr.init("rerun_example_partial_updates")  # illustrative app id
new_colors = [[255, 0, 0], [0, 0, 255]]  # illustrative new values
new_radii = [0.05, 0.1]

# NEW: update only colors and radii; fields that aren't passed are not logged
rr.log("points", rr.Points3D.from_fields(colors=new_colors, radii=new_radii))

# OLD: log raw component batches directly
rr.log("points", [rr.components.ColorBatch(new_colors), rr.components.RadiusBatch(new_radii)])
```

Not only is this more intuitive, but under the hood it also allows us to tag all components with their archetype & field name. In the future, this will allow you to log arbitrary archetypes on the same path without one overwriting another's data. As we gradually embrace tagged components everywhere, all entry points that don't allow for tagging are being deprecated or removed. Check our Migration guide for 0.22 for details and examples.
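To see why logging only `colors` and `radii` counts as a partial update, here is a toy latest-value store in plain Python. It is a deliberately simplified model, not Rerun's storage engine:

- Every value is keyed by entity path, archetype name, and field name, mirroring the component tagging described above.
- A log call writes only the fields it is given.

The `Boxes3D` line illustrates the future behavior mentioned above, where tagging keeps same-named fields from different archetypes apart. `ToyStore` is a hypothetical name.

```python
from dataclasses import dataclass, field


@dataclass
class ToyStore:
    """Hypothetical latest-value store; NOT how Rerun stores data internally.

    Each value is keyed by (entity path, archetype name, field name), mimicking
    components that are tagged with their archetype and field.
    """

    data: dict = field(default_factory=dict)

    def log(self, path: str, archetype: str, **values) -> None:
        # A partial update only writes the fields that were actually passed;
        # every other field keeps its previous value.
        for name, value in values.items():
            self.data[(path, archetype, name)] = value

    def latest(self, path: str, archetype: str) -> dict:
        # Collect the most recent value of every field of this archetype.
        return {
            name: value
            for (p, a, name), value in self.data.items()
            if p == path and a == archetype
        }


store = ToyStore()
store.log("points", "Points3D", positions=[(0, 0, 0), (1, 1, 1)], colors=["red", "red"], radii=[0.1, 0.1])
# Partial update: only colors and radii change; positions are kept.
store.log("points", "Points3D", colors=["blue", "green"], radii=[0.2, 0.3])
# Another archetype's `colors` on the same path doesn't clobber the Points3D
# colors, because the keys are tagged with the archetype name.
store.log("points", "Boxes3D", colors=["yellow"])

print(store.latest("points", "Points3D"))
```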

Similarly, the send_columns API for sending data with multiple timestamps at once has been revamped to work directly with archetypes:

```python
import numpy as np
import rerun as rr

# Assumed inputs: `times` (5 timestamps), `positions` (17 points in total,
# 2 + 4 + 4 + 3 + 4), and `colors` / `radii` (one value per timestamp).

# NEW:
rr.send_columns(
    "points",
    indexes=[rr.TimeSecondsColumn("time", times)],
    columns=[
        # Split the positions into 5 rows, one per timestamp.
        *rr.Points3D.columns(positions=positions).partition([2, 4, 4, 3, 4]),
        *rr.Points3D.columns(colors=colors, radii=radii),
    ],
)

# OLD:
rr.send_columns(
    "points",
    times=[rr.TimeSecondsColumn("time", times)],
    components=[
        rr.Points3D.indicator(),  # had to be supplied by hand
        rr.components.Position3DBatch(np.concatenate(positions)).partition([2, 4, 4, 3, 4]),
        rr.components.ColorBatch(colors),
        rr.components.RadiusBatch(radii),
    ],
)
```

Note that you no longer need to supply indicator components manually. They are now generated automatically under the hood, and will soon be phased out in favor of tagged components.
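The `partition([2, 4, 4, 3, 4])` call in the `send_columns` example splits the 17 positions (2 + 4 + 4 + 3 + 4) into five consecutive batches, one per timestamp in `times`. The plain-Python sketch below mimics only that splitting arithmetic, in which cumulative lengths become end offsets. `partition` here is a hypothetical helper, not the SDK method, which operates on Arrow data.

```python
from itertools import accumulate


def partition(flat: list, lengths: list[int]) -> list[list]:
    """Hypothetical helper: split a flat list into consecutive runs of the
    given lengths, so run i holds the data for row (timestamp) i."""
    if sum(lengths) != len(flat):
        raise ValueError(f"lengths sum to {sum(lengths)}, but there are {len(flat)} items")
    # Running totals mark where each run ends: [2, 6, 10, 13, 17] for the lengths above.
    ends = list(accumulate(lengths))
    starts = [0] + ends[:-1]
    return [flat[start:end] for start, end in zip(starts, ends)]


times = [10.0, 11.0, 12.0, 13.0, 14.0]
positions = [(float(i), 0.0, 0.0) for i in range(17)]  # 17 illustrative points
rows = partition(positions, [2, 4, 4, 3, 4])

for t, row in zip(times, rows):
    print(f"t={t}: {len(row)} points")
```

The lengths must add up to the number of items, which is why the sketch rejects a mismatch instead of silently truncating.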

Relatedly, all SDKs now serialize data to Arrow eagerly, at archetype instantiation. Until now, only the Python SDK did this. This paves the way for generic component types in the future. It also fixes a long-standing issue in our C++ API where some archetypes would only store pointers to the supplied data, which could easily cause segfaults if the archetype was used after the underlying data had been freed.
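Python is memory-safe, so it can't reproduce the C++ segfault, but the ownership difference behind the fix can be sketched with a rough analogy. `LazyPoints` and `EagerPoints` are hypothetical classes:

- `LazyPoints` only keeps a reference to the caller's data and serializes later.
- `EagerPoints` serializes into its own buffer at construction.

The standard-library `array` module stands in for Arrow.

```python
from array import array


class LazyPoints:
    """Hypothetical: keeps a reference to the caller's list, serializes later."""

    def __init__(self, radii: list[float]):
        self.radii = radii  # borrowed: later changes by the caller leak in

    def serialize(self) -> bytes:
        return array("d", self.radii).tobytes()


class EagerPoints:
    """Hypothetical: serializes into its own buffer at instantiation."""

    def __init__(self, radii: list[float]):
        self.buffer = array("d", radii).tobytes()  # owned copy of the data

    def serialize(self) -> bytes:
        return self.buffer


radii = [0.1, 0.2, 0.3]
lazy, eager = LazyPoints(radii), EagerPoints(radii)
radii.clear()  # the caller reuses or releases its data

print(array("d", lazy.serialize()).tolist())   # → []
print(array("d", eager.serialize()).tolist())  # → [0.1, 0.2, 0.3]
```

In C++ the lazy variant would hold a dangling pointer rather than an empty list, which is where the segfaults came from.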

Programmatic control over the Viewer on the Web & Jupyter Notebooks

We're introducing new ways to programmatically manage the state of the Viewer. While Blueprints already provide extensive control over many aspects of the Viewer, certain elements—like timeline position and panel states—aren't captured by the Blueprint model.

To fill this gap, we're introducing a dedicated API channel for managing UI state, starting with the Web Viewer. This new capability enables dynamic interactions with the Viewer when it is embedded in web pages, including Jupyter notebooks.

Fine-grained Time Control

You can now set the playback time and pause or resume a recording's playback from code. This makes it possible to synchronize the Viewer timeline with external tools.

Panel State Management

The top, bottom, and side panels can now be programmatically collapsed or expanded. This is particularly useful for tailoring the interface to specific tasks, or reducing the number of visible elements in the UI when the available space is limited.

Notification panel

We've introduced a new panel for notifications. Instead of disappearing after a few seconds, notifications are now kept until you dismiss them, so important errors and warnings no longer get lost.

Range selections with shift-click

The Blueprint and Streams panels now support range selection using shift-click, which speeds up bulk operations like adding entities to views.
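Range selection is usually anchor-based, as in most file browsers. A plain click selects one row and sets the anchor, and a shift-click selects everything between the anchor and the clicked row, in either direction. Below is a minimal sketch of that model. `RangeSelection` is hypothetical, and Rerun's exact rules (for example around collapsed tree nodes or other modifier keys) may differ.

```python
from typing import Optional


class RangeSelection:
    """Hypothetical anchor-based selection model with shift-click ranges."""

    def __init__(self, items: list[str]):
        self.items = items  # rows in display order, e.g. a flattened entity tree
        self.selected: list[str] = []
        self.anchor: Optional[int] = None  # index of the last plain click

    def click(self, index: int, shift: bool = False) -> None:
        if shift and self.anchor is not None:
            # Select every row between the anchor and the clicked row, inclusive.
            lo, hi = sorted((self.anchor, index))
            self.selected = self.items[lo : hi + 1]
        else:
            # A plain click selects a single row and becomes the new anchor.
            self.selected = [self.items[index]]
            self.anchor = index


rows = ["/world", "/world/robot", "/world/robot/arm", "/world/robot/base", "/world/map"]
sel = RangeSelection(rows)
sel.click(1)              # plain click on "/world/robot"
sel.click(3, shift=True)  # shift-click on "/world/robot/base"
print(sel.selected)       # → ['/world/robot', '/world/robot/arm', '/world/robot/base']
```

Keeping the anchor fixed across shift-clicks lets you grow or shrink the range without starting over. Only a plain click moves the anchor.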

More highlights in short

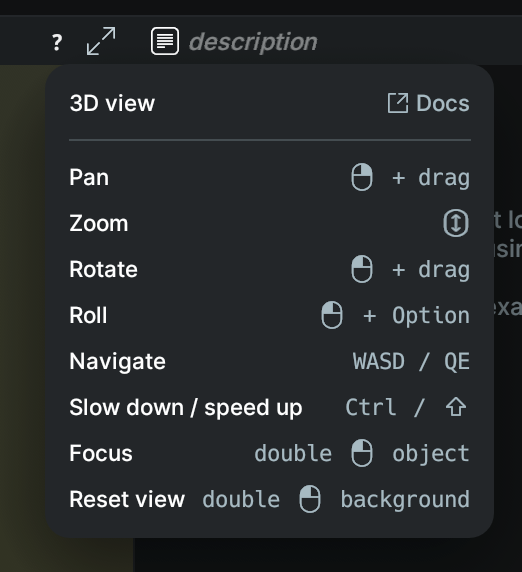

- Improved view help: condensed navigation info & links to the docs.
- Clickable links in custom components: any URL in any component is now clickable in the Viewer.
- Crisper UI rendering: the latest egui version brings much sharper UI rendering.
- Faster transforms: we still have a lot of work to do to make Rerun faster with many entities, but thanks to a new transform cache, scenes with many transforms are now up to twice as fast.
- More example snippets: check the snippet index for an exhaustive list of all our bite-sized examples.
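The release notes don't describe how the new transform cache works internally. As a generic illustration of why caching helps, the sketch below memoizes world transforms in a hypothetical entity tree, so each shared ancestor is composed once instead of once per descendant. To stay short, it uses pure translations, which compose by vector addition; real 3D transforms would be full matrices or similar. All names are hypothetical.

```python
from typing import Optional

Vec3 = tuple[float, float, float]

# Hypothetical scene: each entity path has a translation relative to its parent.
local: dict[str, Vec3] = {
    "/world": (0.0, 0.0, 0.0),
    "/world/robot": (1.0, 0.0, 0.0),
    "/world/robot/arm": (0.0, 2.0, 0.0),
    "/world/robot/arm/gripper": (0.0, 0.0, 0.5),
    "/world/robot/base": (0.0, -1.0, 0.0),
}


def parent(path: str) -> Optional[str]:
    """Parent entity path, or None for a top-level entity."""
    head = path.rsplit("/", 1)[0]
    return head if head else None


class TransformCache:
    """Memoizes world transforms so shared ancestors are composed only once."""

    def __init__(self, local: dict[str, Vec3]):
        self.local = local
        self.world: dict[str, Vec3] = {}
        self.compositions = 0  # how many transforms were actually composed

    def get(self, path: str) -> Vec3:
        if path in self.world:
            return self.world[path]
        p = parent(path)
        base = self.get(p) if p is not None else (0.0, 0.0, 0.0)
        self.compositions += 1
        result = tuple(b + l for b, l in zip(base, self.local[path]))
        self.world[path] = result
        return result


cache = TransformCache(local)
for path in local:
    cache.get(path)
print(cache.get("/world/robot/arm/gripper"))  # → (1.0, 2.0, 0.5)
print(cache.compositions)                     # → 5
```

With the cache, resolving all five entities takes 5 compositions. Walking each entity's parent chain independently would take 1 + 2 + 3 + 4 + 3 = 13. A real cache also has to invalidate an entity's subtree when one of its transforms changes, which this sketch omits.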

Plus many other bug fixes and improvements. For more details, check the changelog.

Try it out and let us know what you think

We're really excited to hear how these new UX and API improvements work for you. There's more coming on all fronts, and we'd love input from the community on what to prioritize next.

Join us on GitHub or Discord to show us what you've built, tell us what you think, and share what you hope to see in the future.